Difference between revisions of "2494: Flawed Data"

Revision as of 12:23, 27 July 2021

Flawed Data

Title text: We trained it to produce data that looked convincing, and we have to admit the results look convincing!

Explanation

This explanation may be incomplete or incorrect: Created by a flawed but CONVINCING AI. Please mention here why this explanation isn't complete. Do NOT delete this tag too soon. If you can address this issue, please edit the page! Thanks.

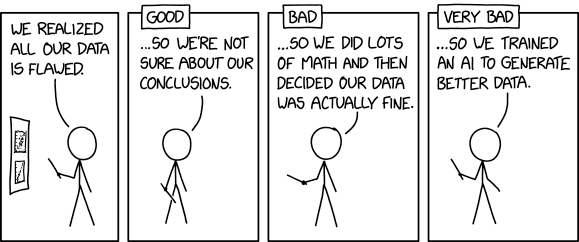

This is another comic about the right and wrong ways to perform research when your data is not adequate.

This time we see Cueball plainly admit that his team has realized that all of their data is flawed. He does not explain how they found out: perhaps the data contradicted the expected outcome, or perhaps a systematic error in the data-gathering process was discovered (or is believed to have been). In the first panel he presents the data on a poster showing two graphs with data points and possible fitted curves.

From there, three different reactions to this realization are displayed, ordered by how good a decision each one represents.

- Good

In the first scenario Cueball admits that they are no longer sure about the conclusions they had drawn from this flawed data. That is, they cannot really draw any conclusions, which is the right (good) decision when you realize that the data you have is not valid.

- Bad

In the second scenario Cueball explains that, after doing a lot of math on (that is, manipulating) their flawed data, they decided the data is actually fine. Math alone cannot make flawed data true. If the flaws can be correctly accounted for, and what is left still sufficiently supports the conclusions, then the analysis might save the day; but merely massaging the data with lots of math is a dubious approach if it cannot be independently justified. Hunting for reasons why the bad data is actually correct, or pruning 'bad' elements in a way that maintains the status quo, builds the researchers' biases into the result: the 'cleaned' data fits the model and the expected outcome well, but is just as erroneous. Statistical analysis can legitimately be used to discard "flaws" such as outliers in a data set, or to assign lower expected accuracy to certain streams of evidence, but it is not valid to decide to do this only after the results failed to match your expectations. Because there are many different statistical methods and tests, trying one after another and selecting post hoc the one(s) most useful to you almost guarantees that you will eventually confirm the outcome you are after, even (or especially) if the data is unreliable.
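The effect of trying many analyses and reporting only the best one can be illustrated with a small simulation. This is a minimal sketch, not anything shown in the comic: it assumes that each "analysis" is an independent two-sample z-test on pure noise, so there is never a real effect to find. The function names, sample sizes and the choice of 20 analyses are all hypothetical. Different tests run on the same data would be correlated, so the real inflation would usually be smaller than in this idealized case.

```python
import random
from statistics import NormalDist


def mean_and_variance(xs):
    """Sample mean and unbiased sample variance."""
    m = sum(xs) / len(xs)
    return m, sum((x - m) ** 2 for x in xs) / (len(xs) - 1)


def p_value(a, b):
    """Two-sided p-value of a two-sample z-test (normal approximation)."""
    mean_a, var_a = mean_and_variance(a)
    mean_b, var_b = mean_and_variance(b)
    z = (mean_a - mean_b) / (var_a / len(a) + var_b / len(b)) ** 0.5
    return 2 * (1 - NormalDist().cdf(abs(z)))


def false_positive_rate(n_analyses, n_experiments=1000, n=30, alpha=0.05, seed=0):
    """Fraction of no-effect experiments that still look 'significant'
    when the smallest of n_analyses p-values is the one reported."""
    rng = random.Random(seed)

    def noise():
        # Both groups come from the same distribution: no real effect exists.
        return [rng.gauss(0, 1) for _ in range(n)]

    hits = 0
    for _ in range(n_experiments):
        best_p = min(p_value(noise(), noise()) for _ in range(n_analyses))
        hits += best_p < alpha
    return hits / n_experiments


honest = false_positive_rate(n_analyses=1)     # one analysis, chosen in advance
flexible = false_positive_rate(n_analyses=20)  # try 20, report the best
print(f"one planned analysis: {honest:.2f}")
print(f"best of 20 analyses:  {flexible:.2f}")
```

Under these assumptions the first rate should stay near the nominal 5%, while the second should land near the theoretical value for 20 independent tests, 1 - 0.95^20, or roughly 64%.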

- Very bad

In the third and final scenario Cueball explains that they scrapped all the flawed data. But instead of gathering new data by correctly redoing the research, measurements or tests, they trained an Artificial Intelligence (AI) to generate better data from nothing but a desire to match a target outcome. This is of course not real data, just a simulation of data, produced by selectively sieving statistical noise for desirable qualities. And since they are probably looking for a specific result, they are training the AI to generate data that supports it. This has nothing to do with research into the problem they are actually investigating and is thus very bad. They may, however, gain some insights into programming the AI (see 2173: Trained a Neural Net). AI is a recurring theme on xkcd.
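The circularity of this approach can be sketched in a few lines. The code below is a deliberately crude stand-in for a trained generator, not a real AI: it uses rejection sampling to sieve pure noise, keeping only points that land near a hoped-for result. The target line `y = 2x + 1`, the tolerance and all function names are hypothetical. Note that no real measurement is ever passed in.

```python
import random


def hoped_for(x):
    """The result the researchers want to see (hypothetical): y = 2x + 1."""
    return 2 * x + 1


def generate_convincing_data(n_points, tolerance=0.5, seed=0):
    """Sieve pure noise, keeping only points close to the hoped-for line.

    No real measurement is consulted anywhere in this function.
    """
    rng = random.Random(seed)
    kept = []
    while len(kept) < n_points:
        x = rng.uniform(0, 10)
        y = rng.uniform(0, 25)  # pure noise, unrelated to any experiment
        if abs(y - hoped_for(x)) <= tolerance:
            kept.append((x, y))
    return kept


def r_squared(points):
    """Agreement of the points with the hoped-for line, not with reality."""
    ys = [y for _, y in points]
    mean_y = sum(ys) / len(ys)
    ss_res = sum((y - hoped_for(x)) ** 2 for x, y in points)
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1 - ss_res / ss_tot


data = generate_convincing_data(50)
print(f"R^2 against the hoped-for line: {r_squared(data):.3f}")
```

The fit looks excellent, but only because the selection rule was the desired answer. The output says nothing about the system the researchers set out to study.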

In the title text the results of the very bad approach are described, and it becomes clear that they got the data they were looking for when they state: We trained it to produce data that looked convincing, and we have to admit the results look convincing! Of course, if they successfully ask the AI for data that supports their theory in a way that looks convincing, then that is exactly what they get back.

Transcript

- [Cueball is pointing a stick at a poster hanging behind him while addressing an unseen audience. There are two graphs on the poster with data points and fitting curves.]

- Cueball: We realized all our data is flawed.

- [The next three panels each have a label in a frame that overlaps the top of the panel's frame. The poster can no longer be seen in the rest of the panels. Cueball has taken the stick down.]

- Label: Good

- Cueball: ...So we're not sure about our conclusions.

- [Cueball holds the pointer almost as in the first panel.]

- Label: Bad

- Cueball: ...So we did lots of math and then decided our data is actually fine.

- [Cueball holds the pointer so it points upwards. He also lifts his other hand up a bit.]

- Label: Very bad

- Cueball: ...So we trained an AI to generate better data.

Discussion

For the first time in a very long time I was the first to make an attempt at the main explanation. I guess this comic came out very late then? Or just late up on explain xkcd? Seems like the Monday comic first came up on Tuesday in many countries including those in Europe. But guess it was still Monday in the US, at least in the western parts? I hope this is not as bad an attempt as Cueball's research strategies in the last panel :-) --Kynde (talk) 07:05, 27 July 2021 (UTC)

- Isn't it related to a recently published article[1][2] about bias introduced into AI by humanly-biased data?

- Reports of bias in AI have been in the news for several years. Most notably, facial-recognition systems that are bad at distinguishing faces of black and brown people. Barmar (talk) 13:58, 27 July 2021 (UTC)

- "On two occasions I have been asked [by members of Parliament], 'Pray, Mr. Babbage, if you put into the machine wrong figures, will the right answers come out?' I am not able rightly to apprehend the kind of confusion of ideas that could provoke such a question." - Charles Babbage ; note that century and half passed since that quote and people STILL somehow expect computer will be able to reach correct results based on wrong data. -- Hkmaly (talk) 17:23, 27 July 2021 (UTC)


This explanation has some good ideas for what these things mean, but it goes into them in excessive detail, which doesn't leave a lot of room for other ideas to be included side-by-side. I think that might be common. I was just thinking that there are a lot of ways extra math is used to produce worse conclusions: as soon as you have to work more to find what is good, occam's razor says you are less likely to be relevant. Similarly there are a lot of ways that AI is used to work with data, but its power greatly surpasses its ability to reflect the underlying meaning of things. For example, the existing data can be extended without being scrapped, and look completely real in every known respect, but that doesn't mean that any new information is included in what is generated, since the only data to work with is what was already there. 108.162.219.98 16:19, 27 July 2021 (UTC)

There are various techniques in Machine Learning to augment the training data, which can include generating fake data that looks like the real data; one such technique is using Generative adversarial network (GAN).

On first reading, I was thinking the Good approach would be to go out and run new experiments and measurements, incorporating the lessons from their flawed data, to avoid making the same mistakes again. This can be quite expensive, but it is really the only way to increase the validity of the data. Just saying "We can't trust our conclusions," throws away the opportunity to learn from earlier mistakes and come up with better measurements next time. Nutster (talk) 14:38, 28 July 2021 (UTC)

Is this a reference to Biogen? Doing some motivated post hoc subgroup analysis to get Aduhelm approved.

From what I know, the "very bad" approach is becoming common in data science; see the Wikipedia page for Synthetic_data#Synthetic_data_in_machine_learning or, when done on a single feature at a time, Imputation_(statistics). Imputation can be problematic because the data may be missing due to some confounding variable, so filling in the gaps based on existing values will bias the results. A slightly related example is class imbalance, where some groups are underrepresented and therefore won't be predicted as accurately as overrepresented groups. Instead of gathering more data, especially more representative data, data scientists will often use something like SMOTE to generate more data. An example of a widely used but frankly bad synthetic dataset is kddcup99. 172.68.142.147 05:25, 11 August 2021 (UTC)
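The imputation problem described above can be shown with a toy example. This is only a sketch under invented assumptions: the "true" values, the rule that high values go missing far more often than low ones, and every name in the code are hypothetical. It fills the gaps with the mean of the observed values, which is one simple form of imputation.

```python
import random

rng = random.Random(0)

# Hypothetical "true" measurements that a perfect survey would have collected.
true_values = [rng.gauss(50, 10) for _ in range(10_000)]


def is_missing(value):
    """Assumed missingness rule: high values are lost far more often."""
    return rng.random() < (0.8 if value > 55 else 0.1)


observed = [v for v in true_values if not is_missing(v)]
observed_mean = sum(observed) / len(observed)

# Mean imputation: fill every gap with the mean of what was observed.
n_missing = len(true_values) - len(observed)
imputed = observed + [observed_mean] * n_missing

true_mean = sum(true_values) / len(true_values)
imputed_mean = sum(imputed) / len(imputed)
print(f"true mean:    {true_mean:.1f}")
print(f"imputed mean: {imputed_mean:.1f}")
```

The imputed data set is complete, but its mean simply repeats the bias of the observed values, because the filled-in numbers contain no information about what was actually missing.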

Super Bad: So we used a RNG to make completely random data

Ultra Bad: So I just picked my favorite numbers to use as data

The joke here is that adding the artificial intelligence variable is going to get things really screwed up!