2110: Error Bars

Explanation


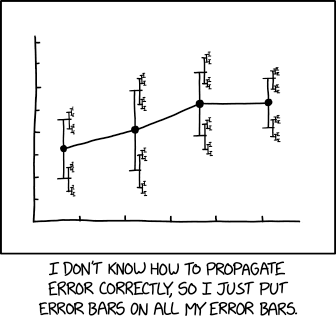

On statistical charts and graphs, it is common to include error bars showing how far the actual value is likely to vary from the value shown (that is, the possible error of the value shown). Since every measurement carries some uncertainty, error bars help an observer judge how accurate the data are, and what it would mean if the true value lay anywhere within the likely error rather than exactly at the value shown. There are statistical methods for calculating error bars (they can show a standard deviation, a standard error, or a confidence interval), but because there are several ways of calculating them, and because many people are unfamiliar with statistical methods, error bars are often misinterpreted or misunderstood.
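The three kinds of error bar mentioned above can differ a lot in size for the same data, which is one reason they are easy to misread. The sketch below computes all three half-widths for one made-up sample. It assumes the large-sample normal approximation (z = 1.96) for the 95% confidence interval; for small samples a t-distribution critical value would be more appropriate. The function name `error_bar_half_widths` and the data are hypothetical, chosen only for illustration.

```python
import math
import statistics


def error_bar_half_widths(sample: list[float]) -> dict[str, float]:
    """Return three common error-bar half-widths for the same sample.

    The 95% CI uses the normal approximation (z = 1.96); with few points a
    t-distribution critical value would give a somewhat wider interval.
    """
    n = len(sample)
    sd = statistics.stdev(sample)   # sample standard deviation (n - 1 denominator)
    se = sd / math.sqrt(n)          # standard error of the mean
    ci95 = 1.96 * se                # half-width of an approximate 95% CI for the mean
    return {"sd": sd, "se": se, "ci95": ci95}


# Hypothetical measurements, made up purely for illustration.
measurements = [1.61, 1.72, 1.66, 1.70, 1.59, 1.75, 1.68, 1.73]
mean = statistics.mean(measurements)
for name, half_width in error_bar_half_widths(measurements).items():
    print(f"{name:>4}: {mean:.3f} ± {half_width:.3f}")
```

With eight points the standard-deviation bar is about 2.8 times wider than the standard-error bar, so a reader who guesses the wrong kind can badly misjudge the precision.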

In the comic, Randall says he is one of those people who do not understand error bars; specifically, he does not know how to propagate error correctly. As a result, he puts error bars on the ends of his error bars, to reflect the fact that the error may be greater or smaller than his first error bars show. However, since his second set of error bars is also suspect, he puts a third set of error bars on them. This repeats ad infinitum, creating a fractal similar to a Cantor set.
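The recursion can be sketched as follows, under a hypothetical assumption the comic does not state: each new bar's half-width is a fixed fraction `r` of the bar it sits on. With a starting half-width `w` and depth `d`, there are 2**d - 1 bars, and the outermost reach is w(1 + r + ... + r^(d-1)), a geometric series that stays bounded by w / (1 - r) when r < 1. The function name and the ratio are illustrative; 1.68 and 0.12 merely echo the title-text interval 1.56 to 1.80.

```python
def nested_error_bars(center: float, half_width: float, ratio: float,
                      depth: int) -> list[tuple[float, float]]:
    """Return (low, high) for every bar when bars are drawn on the ends of bars.

    Hypothetical assumption: each new bar's half-width is `ratio` times the
    half-width of the bar whose endpoint it sits on.
    """
    if depth == 0:
        return []
    low, high = center - half_width, center + half_width
    bars = [(low, high)]
    for end in (low, high):
        # Each endpoint of this bar gets its own, smaller error bar.
        bars += nested_error_bars(end, half_width * ratio, ratio, depth - 1)
    return bars


center, w, r = 1.68, 0.12, 0.3   # r is made up; 1.68 and 0.12 echo the title text
for depth in (1, 2, 5, 10):
    bars = nested_error_bars(center, w, r, depth)
    reach = max(high for _, high in bars) - center
    print(depth, len(bars), round(reach, 6))
print("limit of reach:", w / (1 - r))
```

The number of bars doubles with every level while the total reach converges, which is what gives the picture its fractal look.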

In the title text, he states that the effect strength is 1.68 and follows it with the 95% confidence interval (an interval computed by a procedure that, across repeated samples, would contain the true value 95% of the time), which would normally be written something like "1.68 (95% CI 1.56 - 1.80)." Since he is treating those bounds as uncertain, he starts with "1.68 (95% CI 1.56" but, instead of giving the upper bound, opens a 95% CI for that lower bound, "95% CI 1.52," then a CI for the lower bound of that interval, "95% CI 1.504," and so on. He goes 11 layers deep before resorting to an ellipsis.
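The nesting pattern of the title text can be reproduced mechanically. This sketch uses the article's paraphrased format ("95% CI x") rather than claiming to match the exact title-text punctuation, and it only uses the three bounds quoted above; the function name `nested_ci` is hypothetical. Each interval is opened but never closed, which is why the string can only end in an ellipsis.

```python
def nested_ci(estimate: float, lower_bounds: list[float], level: int = 95) -> str:
    """Format an estimate followed by CIs nested on each successive lower bound.

    Only the opening of each interval is written; the closing parentheses are
    never reached, mirroring the title text's trailing ellipsis.
    """
    parts = [f"{estimate}"]
    for bound in lower_bounds:
        # Open a new CI on the previous lower bound instead of closing the old one.
        parts.append(f"({level}% CI {bound}")
    return " ".join(parts) + " ..."


print(nested_ci(1.68, [1.56, 1.52, 1.504]))
```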

Transcript


A line graph...

Discussion

I put in a little thing about fractals and Cantor sets; it seemed relevant. Netherin5 (talk) 17:32, 11 February 2019 (UTC)

- Fractals seem more relevant than the Cantor set, since the Cantor set is the limit as you infinitely subtract stuff from a finite interval, whereas this is adding (more like a geometric series). 172.68.174.64 23:25, 12 February 2019 (UTC)

Would the series have a limit, or would it continue until the error bars go from -infinity to +infinity?

It will have a limit. Because each time it will be 5% of the previous errors, it will shrink over time. --172.68.154.40

https://repl.it/repls/AppropriateMatureConferences for demonstration --172.68.154.40

- Yes and no. The point is that to calculate one sigma you need at least two points, so there is always one fewer sigma than there are points, and sigmas of sigmas are fewer by one more. So the recursion depth of sigmas can't exceed the number of points minus one (you have to start with two). The comic is wrong on this one: it has four points and four levels (on the graph), but it can have only three.

Perhaps you should review Zeno's paradoxes. PotatoGod (talk) 02:11, 12 February 2019 (UTC)

That's not my understanding of "propagating error". I understand that phrase to mean that you're taking a measured value (that has uncertainty) and plugging it into a formula, i.e. using it to calculate another value. Because of the way this works, the (absolute and relative) error on the newly calculated value will generally differ from the error in the original value (the overall size of the error bars changes). Randall's joke is that, instead of calculating the new error bars, he calculates error bars on the ends of his existing bars. I also agree with Netherin5 that there's a clear fractal reference here.

162.158.79.113 17:45, 11 February 2019 (UTC) hagmanti
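For comparison, the standard first-order ("linear") propagation that the comment describes can be sketched like this. It assumes small, independent (uncorrelated) input errors and uses sigma_f^2 = sum_i (df/dx_i * sigma_i)^2, with each partial derivative estimated by a central finite difference. The function name `propagate`, the step size, and the rectangle example are illustrative choices, not anything taken from the comic.

```python
import math
from typing import Callable, Sequence


def propagate(
    f: Callable[..., float],
    values: Sequence[float],
    errors: Sequence[float],
    rel_step: float = 1e-6,
) -> tuple[float, float]:
    """First-order propagation of independent uncertainties through f.

    sigma_f**2 = sum_i (df/dx_i * sigma_i)**2, where each partial derivative
    is estimated with a central finite difference.
    """
    result = f(*values)
    variance = 0.0
    for i, (x, sigma) in enumerate(zip(values, errors)):
        step = rel_step * max(abs(x), 1.0)  # finite-difference step for input i
        up = list(values)
        up[i] = x + step
        down = list(values)
        down[i] = x - step
        dfdx = (f(*up) - f(*down)) / (2 * step)
        variance += (dfdx * sigma) ** 2
    return result, math.sqrt(variance)


# Hypothetical example: area of a 2.0 ± 0.1 by 3.0 ± 0.2 rectangle.
area, area_err = propagate(lambda length, width: length * width, [2.0, 3.0], [0.1, 0.2])
print(f"area = {area:.2f} ± {area_err:.2f}")  # prints "area = 6.00 ± 0.50"
```

The inputs have relative errors of 5% and about 6.7%, while the computed area's relative error is about 8.3%: the bars on the derived value change size, rather than sprouting bars of their own.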

- I noticed this error too, and tried to correct it, but it sounds like you understand it better than I. Feel free to edit the article itself! 162.158.78.178 23:38, 11 February 2019 (UTC)

A question, too: does the CI tend toward negative infinity, zero, or one? Edit: Today I learned what CI means. Netherin5 (talk) 18:01, 11 February 2019 (UTC)

He has confidence intervals in confidence intervals alone; despite this, you see, he lacks confidence in...he. GreatWyrmGold (talk) 20:48, 11 February 2019 (UTC)

Just to pair with the alt-text: ...))))))))))). 108.162.242.19 02:31, 13 February 2019 (UTC)

https://explainxkcd.com/859/ relevant --172.68.154.64

Good explanations, but if I understand the comic correctly, the article does not really get to the point. It is indeed true that different modelling assumptions will give different confidence intervals, but a more mundane and more important source of uncertainty is statistical error (e.g., sampling error). CIs are typically used to convey the uncertainty around a point estimate (e.g., a mean) which has been computed from a random sample. If you take another random sample from the same population (e.g., perform an exact replication of an experiment), you will get a different mean, but also a different CI. See Cumming's dance of p-values and CIs for an illustration: https://www.youtube.com/watch?v=5OL1RqHrZQ8, or a talk I gave that covers a larger range of statistics: https://www.youtube.com/watch?v=UKX9iN0p5_A. In my talk I explain why it doesn't make sense to report inferential statistics (p-values, CIs, etc.) with many significant digits, because you could easily have obtained very different p-values or CIs. The belief that inferential statistics are "stable" across replications is a very common misconception that can easily lead to erroneous inferences. So if you care about your statistical analyses being interpreted correctly, it is tempting to show the uncertainty around all the inferential statistics you report, including CI limits, as Munroe is suggesting. Like any statistic, CI limits are a function of the data and thus have a sampling distribution (https://statmodeling.stat.columbia.edu/2016/08/05/the-p-value-is-a-random-variable/). Thus you can estimate the standard deviation of this sampling distribution, and this gives you the standard error of the confidence limit. There is one inaccuracy in the comic (I think): you can't define CIs on CI limits, because there is no true population value of CI limits.
However, you can compute standard errors of CI limits, or alternatively prediction intervals, and then compute standard errors and prediction intervals again and again, recursively. If my explanation makes any sense, I can try to summarize it and incorporate it in the article. Dragice (talk) 10:47, 13 February 2019 (UTC)
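The idea that a CI limit has its own sampling distribution, and therefore a standard error, can be sketched by simulation. This assumes a known normal population (the values of `mu`, `sigma`, and `n` are made up) purely so the simulation can run; it draws many replicate samples, recomputes the lower 95% CI limit each time (normal approximation, z = 1.96), and takes the standard deviation of those limits. The function names are hypothetical.

```python
import math
import random
import statistics


def lower_ci_limit(sample: list[float], z: float = 1.96) -> float:
    """Lower limit of an approximate 95% CI for the mean (normal approximation)."""
    se = statistics.stdev(sample) / math.sqrt(len(sample))
    return statistics.mean(sample) - z * se


def se_of_lower_limit(mu: float, sigma: float, n: int,
                      replications: int = 2000, seed: int = 0) -> float:
    """Estimate the standard error of the CI lower limit by simulated replications.

    Each replication draws a fresh sample of size n from an assumed normal
    population N(mu, sigma**2) and recomputes the lower CI limit.
    """
    rng = random.Random(seed)
    limits = [
        lower_ci_limit([rng.gauss(mu, sigma) for _ in range(n)])
        for _ in range(replications)
    ]
    return statistics.stdev(limits)


# Hypothetical population and sample size, chosen only for illustration.
print(se_of_lower_limit(mu=1.68, sigma=0.3, n=25))
```

With real data the population is unknown, so resampling the observed data (a bootstrap) is a common substitute for drawing from an assumed distribution.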

I doubt this was intended but what was brought to my mind -- and then what I couldn't get out of my head until I created one specifically for this comic -- was this old meme: Yo Dawg, I heard you like error bars.... Thought it worth contributing! :) 172.68.47.114 16:56, 24 September 2019 (UTC)Brian