Difference between revisions of "Main Page"

(Missed one) |


| Line 8: | Line 8: | ||

and only {{#expr:{{LATESTCOMIC}}-({{PAGESINCAT:Comics|R}}-10)}} | and only {{#expr:{{LATESTCOMIC}}-({{PAGESINCAT:Comics|R}}-10)}} | ||

({{#expr: ({{LATESTCOMIC}}-({{PAGESINCAT:Comics|R}}-10)) / {{LATESTCOMIC}} * 100 round 0}}%) | ({{#expr: ({{LATESTCOMIC}}-({{PAGESINCAT:Comics|R}}-10)) / {{LATESTCOMIC}} * 100 round 0}}%) | ||

| − | remain. '''[[Help:How to add a new comic explanation|Add yours]]''' while there's a chance! | + | [[List of unexplained comics|remain]]. '''[[Help:How to add a new comic explanation|Add yours]]''' while there's a chance! |

</center> | </center> | ||

== Latest comic == | == Latest comic == | ||

Revision as of 13:45, 4 May 2013

Welcome to the explain xkcd wiki!

We have collaboratively explained 5 xkcd comics, and only 2920 (100%) remain. Add yours while there's a chance!
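The counts in the sentence above follow from the two `#expr` template expressions shown in the diff. Below is a minimal Python sketch of that arithmetic. The inputs are assumptions: 2925 for `{{LATESTCOMIC}}` and 15 for `{{PAGESINCAT:Comics|R}}` were inferred because they reproduce the rendered 5 / 2920 / 100%, and were not read from the wiki. The function names, and the reading of the `-10` as an offset for non-comic pages in the category, are likewise hypothetical.

```python
import math


def remaining_comics(latest_comic: int, pages_in_category: int, offset: int = 10) -> int:
    """Mirror {{#expr:{{LATESTCOMIC}}-({{PAGESINCAT:Comics|R}}-10)}}.

    `offset` stands for the literal 10 in the template; treating it as the
    number of non-comic pages in the category is an assumption.
    """
    explained = pages_in_category - offset
    return latest_comic - explained


def remaining_percent(latest_comic: int, pages_in_category: int, offset: int = 10) -> int:
    """Mirror the second expression: remaining / latest * 100, rounded to 0 decimals."""
    share = remaining_comics(latest_comic, pages_in_category, offset) / latest_comic * 100
    # floor(x + 0.5) rounds non-negative values to the nearest integer;
    # Python's built-in round() would round exact halves to even instead.
    return math.floor(share + 0.5)


# Assumed inputs, chosen to match the rendered sentence.
print(remaining_comics(2925, 15))   # 2920
print(remaining_percent(2925, 15))  # 100
```

With these inputs the share is about 99.83%, which is why the page displays 100% even though five comics already have explanations.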

Latest comic

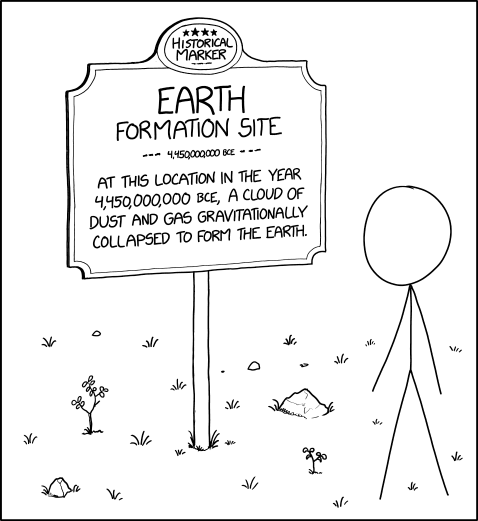

Earth Formation Site

Title text: It's not far from the sign marking the exact latitude and longitude of the Earth's core.

Explanation

This explanation may be incomplete or incorrect: Created by a BLUE PLAQUE COMMITTEE - Please change this comment when editing this page. Do NOT delete this tag too soon.

In this comic, Cueball stands in front of a sign that declares its location to be a historical site. Typically, these signs are placed at precise locations where historical and even mythological events happened (such as where battles were fought, or where people of note were born, resided, accomplished something, or died). In some cases, multiple locations lay claim to events whose true locations are uncertain (or, of course, to events that span multiple locations, such as where people resided). However, the event on this sign is the formation of the Earth, which cannot be pinned down to one precise location on Earth, so the sign could just as well be placed anywhere on the planet.

It may also be of interest that, because the Sun takes about 225 million years to orbit the center of the Milky Way galaxy, the sign is misleading: the Earth's exact location within the galaxy at the time of its formation is unclear. There's also the matter of the movement of the galaxy itself relative to other objects. A marker such as this one assumes some frame of reference, which may be uncertain or unspecified.

The date on the sign is also ridiculously precise, in keeping with the information usually found on historical markers but absurd in the context of the tens or hundreds of millions of years thought to be required for planet formation.

The title text refers to the "exact latitude and longitude of the Earth's core". Since a line drawn straight down from any latitude and longitude on the globe passes through the Earth's core, every pair of coordinates could claim to mark it. This reinforces the idea that no single location can be picked as the exact place where the Earth formed.

Transcript

This transcript is incomplete. Please help edit it! Thanks.

(Cueball is standing in front of a sign in a field of grass. The sign reads:)

HISTORICAL MARKER

EARTH FORMATION SITE

~ 4,450,000,000 BCE ~

At this location in the year 4,450,000,000 BCE, a cloud of dust and gas gravitationally collapsed to form the Earth.


New here?


You can read a brief introduction about this wiki at explain xkcd. Feel free to sign up for an account and contribute to the wiki! We need explanations for comics, characters, themes, memes and everything in between. If it is referenced in an xkcd web comic, it should be here.

- If you're new to wikis like this, take a look at these help pages describing how to navigate the wiki, and how to edit pages.

- Discussion about various parts of the wiki is going on at Explain XKCD:Community portal. Share your 2¢!

- List of all comics contains a complete table of all xkcd comics so far and the corresponding explanations. The missing explanations are listed here. Feel free to help out by creating them! Here's how.

Rules

Don't be a jerk. Many comics don't have set-in-stone explanations; feel free to put multiple interpretations in the wiki page for each comic.

If you want to talk about a specific comic, use its discussion page.

Please submit only material that is directly related to xkcd and helps everyone understand it better, and of course only material that can legally be posted (and freely edited). Off-topic or otherwise inappropriate content is subject to removal or modification at admin discretion, and users who repeatedly post such content will be blocked.

If you need assistance from an admin, post a message to the Admin requests board.