Difference between revisions of "Main Page"

(Trimming it down a bit.)

Line 3:

<font size=5px>''Welcome to the '''explain [[xkcd]]''' wiki!''</font>

− We have an explanation for all [[:Category:Comics|'''{{#expr:{{PAGESINCAT:Comics|R}}-

+ We have an explanation for all [[:Category:Comics|'''{{#expr:{{PAGESINCAT:Comics|R}}-9}}''' xkcd comics]],

− <!-- Note: the -

+ <!-- Note: the -9 in the calculation above is to discount subcategories (there are 8 of them as of 2013-02-27),

− as well as [[List of all

+ as well as [[List of all comics]], which is obviously not a comic page. -->

and only {{PAGESINCAT:Incomplete articles|R}}

({{#expr: {{PAGESINCAT:Incomplete articles|R}} / {{LATESTCOMIC}} * 100 round 0}}%) [[:Category:Incomplete articles|are incomplete]]. Help us finish them!

Revision as of 02:01, 20 June 2013

Welcome to the explain xkcd wiki!

We have an explanation for all 6 xkcd comics, and only 0 (0%) are incomplete. Help us finish them!

Latest comic

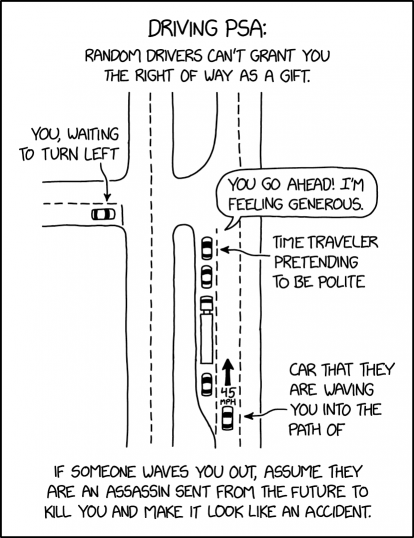

Driving PSA

Title text: This PSA brought to you by several would-be assassins who tried to wave me in front of speeding cars in the last month and who will have to try harder next time.

Explanation

This explanation may be incomplete or incorrect: Created by a CLUELESS BOT DRIVING A 72.43048KM/H - Please change this comment when editing this page. Do NOT delete this tag too soon.

A PSA is a public service announcement. This comic says that when drivers who have the right of way try to wave you through busy traffic, they are actively trying to kill you and get you run over: because they have the right of way, they can blame you for any subsequent deaths or injuries.

Transcript

This transcript is incomplete. Please help edit it! Thanks.

Driving PSA:

Random drivers can’t grant you the right of way as a gift.

[An H-road intersection]

You, waiting to turn left

You go ahead! I’m feeling generous.

Time traveler pretending to be polite

45 MPH

Car that they are waving you into the path of

If someone waves you out, assume that they are an assassin sent from the future to kill you and make it look like an accident.

New here?

You can read a brief introduction about this wiki at explain xkcd. Feel free to sign up for an account and contribute to the wiki! We need explanations for comics, characters, themes, memes and everything in between. If it is referenced in an xkcd web comic, it should be here.

- If you're new to wikis like this, take a look at these help pages describing how to navigate the wiki, and how to edit pages.

- Discussion about various parts of the wiki is going on at Explain XKCD:Community portal. Share your 2¢!

- List of all comics contains a complete table of all xkcd comics so far and the corresponding explanations. The missing explanations are listed here. Feel free to help out by creating them! Here's how.

Rules

Don't be a jerk. Many comics don't have set-in-stone explanations; feel free to put multiple interpretations in the wiki page for each comic.

If you want to talk about a specific comic, use its discussion page.

Please only submit material that is directly related to xkcd and helps everyone better understand it, and of course only submit material that can legally be posted (and freely edited). Off-topic or otherwise inappropriate content is subject to removal or modification at admin discretion, and users who repeatedly post such content will be blocked.

If you need assistance from an admin, post a message to the Admin requests board.