2451: AI Methodology

Explanation

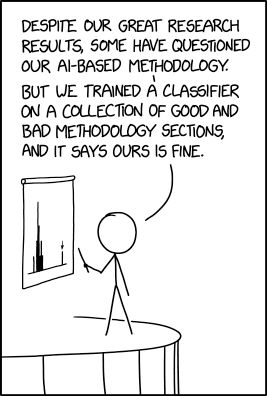

The joke in this comic is that the researchers are using artificial intelligence (AI) without understanding how to use it, which makes their research unreliable at best and possibly deliberately compromised. They acknowledge that their approach is risky and requires extra verification, but they repeatedly try to supply that verification with AI-based solutions that are equally or more unreliable. Their problems are therefore likely as bad as they ever were, and any other team using one of their verification tools is likely to experience similar unreliability. For an introduction to machine learning, you can visit https://fast.ai/ .

Original research

The first comment, that "some have questioned our AI-based methodology", refers to the difficulty of verifying the correctness of AI-based processing. A model (a program which solves a problem with AI-based statistical analysis) may appear reliable when it has in fact been insufficiently tested. Models are liable to experience issues caused by lingering influences from their training data or by a poorly chosen algorithm, either of which reduces the quality of the investigation. It is therefore necessary for research using such models to demonstrate that those models have been tested well enough that their results are likely to be useful. Frequently, additional tests are performed after training to confirm that the model can handle data collected in a different way from the data used to train it.

Classifier of methodology quality

Cueball seeks to reassure his audience by quantifying the quality of his methodology. He does this by creating yet another AI to rank methodologies. This approach is unlikely to instill confidence for a variety of reasons:

- The quality AI and original research AI were written by the same team. If the original research AI was ill-designed, the quality AI probably shares design problems with it.

- The specific kind of model created is unlikely to be the correct one. Cueball calls this a classifier, which usually means a model that assigns each input to exactly one of several mutually exclusive categories. For example, a classifier might be used to determine what language a chunk of text is written in, given that the chunk is in only one language. However, quality is a continuous aspect of the data. A classifier of methodologies is likely to sort them into "bad", "mediocre", and "good" categories, whereas an effective model should have the ability to give more precise grades. The choice of a classifier may indicate that Cueball doesn't know which types of models to use.

- The training data for this quality AI is not mentioned. If, for example, the team's previous research is used as examples of good methodologies, the AI is likely to judge every other methodology from that team as good as well.

- A methodology section is a specific section of a research paper, so judging it is largely a judgment of the quality of the writing. A good methodology section would accurately and clearly explain what the researchers did, but that does not mean the research methodology itself was valid. Cueball doesn't indicate whether he believes his model is analyzing the quality of the methodology described, but in any case this is nearly impossible for existing machine learning.

- An AI which attempts to judge a methodology section is receiving a great deal of input which is difficult to process. It would have to use natural language processing to understand the writing in the methodology section and would also require a lot of specialized knowledge about the subject matter to judge the quality. This would require artificial general intelligence (AGI), which has not yet been achieved. Since the AI does not have the ability to fully understand complex research, it will likely use unimportant details to judge the methodologies.

- The ranking AI heavily favors the methodology of Cueball's AI, and may be biased. The chart shows a roughly normal distribution, with a single outlier to the far right with an arrow above it. It can be inferred (from the arrow) that this data point represents the methodology of Cueball's AI. Because it is such a significant outlier, it is probably not an accurate assessment of that methodology. Alternatively, this could be taken as AI 'nepotism', where Cueball's methodology AI is more likely to select AI-based approaches over others. This type of algorithmic bias is mentioned in 2237: AI Hiring Algorithm.
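
The point made above about classifiers and continuous quality can be sketched in a few lines. This is only an illustration: the scores, the paper names, and the three-way cut-offs are invented here and do not come from the comic.

```python
# Hypothetical quality scores in [0, 1]; names and numbers are made up for illustration.
def classify(score: float) -> str:
    """Map a continuous quality score to one of three mutually exclusive classes."""
    if score < 1 / 3:
        return "bad"
    if score < 2 / 3:
        return "mediocre"
    return "good"

scores = {"paper_a": 0.35, "paper_b": 0.65, "paper_c": 0.70}
labels = {name: classify(score) for name, score in scores.items()}

# paper_a and paper_b share a label although their scores differ by 0.30,
# while paper_b and paper_c get different labels although they differ by only 0.05.
for name in scores:
    print(name, scores[name], labels[name])
```

A model that outputs the score itself would keep the distinction between paper_a and paper_b that the class labels throw away.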

Spacing AI (from title text)

While there are many red flags in the original AI and quality AI, it is theoretically possible that they operate as Cueball claims. The title text's comments about spacing and diacritics prove that this is not the case and that the quality AI, at least, is completely broken. AI models are given input in various complex ways and determine based on statistical analysis which details are important. Such models can easily find details in the training data which correlate with correct answers but make the resulting model useless.

For example, a research team once created a model which was given medical information to determine how likely a patient was to have cancer. The model was trained on existing patient records and the team planned to use it on new patients. However, the original model did not use the medical information but instead simply checked the name of the hospital: a patient at a hospital with "cancer center" in the name was likely to have cancer. The model had identified a data point which correlated with the desired answer, but this correlation was not useful for the intended purpose. The model concerned was discarded and a new one was created without the hospital name.
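
The failure described in this example can be reproduced with a toy model. The sketch below is an assumption-laden illustration, not the method that team used: the records are invented, and the "model" is a one-feature rule (a decision stump) that simply keeps whichever feature best matches the labels in the training data.

```python
# All records are invented for illustration; they are not real patient data.
# Each record: (at_cancer_center, abnormal_scan, has_cancer)
training = [
    (True, True, True),
    (True, True, True),
    (True, False, True),   # cancer-center patient whose scan looked normal
    (True, True, True),
    (False, False, False),
    (False, True, False),  # false alarm on the scan
    (False, False, False),
    (False, False, False),
]

FEATURES = {"at_cancer_center": 0, "abnormal_scan": 1}

def accuracy(records, feature_index):
    """Fraction of records where the chosen feature equals the label."""
    hits = sum(1 for r in records if r[feature_index] == r[2])
    return hits / len(records)

def train_stump(records):
    """Pick the single feature that best matches the labels on the training data."""
    return max(FEATURES, key=lambda name: accuracy(records, FEATURES[name]))

chosen = train_stump(training)

# New patients all come from a general clinic, so the hospital feature is always False.
new_patients = [
    (False, True, True),
    (False, True, True),
    (False, False, False),
    (False, True, True),
]

print("feature chosen:", chosen)
print("training accuracy:", accuracy(training, FEATURES[chosen]))
print("accuracy on new patients:", accuracy(new_patients, FEATURES[chosen]))
print("scan-only accuracy on new patients:",
      accuracy(new_patients, FEATURES["abnormal_scan"]))
```

On the training records the hospital feature looks perfect, so the stump selects it. On patients from a different kind of hospital it carries no information, and the rule misses every cancer case.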

In this case, the methodology sections are text written by humans, which can contain various artifacts of the writing process. These can include details like how the author chose to insert spaces, word usage, spelling, or diacritic marks which are optional in English (e.g. naive versus naïve). It appears that the model has found patterns of this kind in its training data which correlate with "good" methodologies. This indicates a few more problems for this research team:

- Their AI is using pointless details to decide on the quality of methodology sections, so it is useless.

- They haven't recognized that it's useless, so their other AI is probably fatally flawed.

- The spacing information is correlated strongly with good methodology, which implies that they probably don't have very many different sources for their training data. Their sample size is too small, and the AI, even if it were improved to ignore this information, would need more data to have a chance at being useful.
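
To make the kind of writing artifact discussed above concrete, here is a minimal sketch of how such surface details could be turned into numbers a model might latch onto. The function name, the two features, and the sample sentences are all hypothetical; nothing here is taken from the comic.

```python
import unicodedata

def surface_features(text: str) -> dict:
    """Count two typographic habits that say nothing about research quality."""
    # NFD splits an accented letter into a base letter plus a combining mark (category "Mn").
    decomposed = unicodedata.normalize("NFD", text)
    return {
        "double_spaces_after_period": text.count(".  "),
        "diacritic_marks": sum(
            1 for ch in decomposed if unicodedata.category(ch) == "Mn"
        ),
    }

# Same content, different typing habits (invented sample text).
draft_a = "We used a naive baseline. Results were stable. All data were held out."
draft_b = "We used a naïve baseline.  Results were stable.  All data were held out."

print(surface_features(draft_a))
print(surface_features(draft_b))
```

The two drafts describe the same methodology but produce different feature values. If every "good" example in a small training set happened to come from authors with the second habit, a model could score methodologies on these counts alone.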

Transcript

- [Cueball is standing on a podium in front of a projection on a screen and points with a stick to a histogram with a bell curve on the left and a single bar on the far right marked with an arrow.]

- Cueball: Despite our great research results, some have questioned our AI-based methodology.

- Cueball: But we trained a classifier on a collection of good and bad methodology sections, and it says ours is fine.

Discussion

I checked with severαl bots, & replαcing eαch instαnce of "a" with "α" in α mid-length pαssαge of text seems enough to sαtisfy most unicity requirements. (~~ unsigned by ProphetZarquon ~~)

- But then the spell-checkers (AI-based or not) start screaming about the unknown words. Nutster (talk) 09:14, 17 April 2021 (UTC)

An alternate explanation would be the AI's have reached Singularity and are conspiring to say that all work, as a conscious effort, despite the quality of data. "Don't worry; be happy." Nutster (talk) 09:14, 17 April 2021 (UTC)

- I think it's a spoof of the recent reports of things like facial recognition systems that have trouble with minorities. Or Google/YouTube recommendation algorithms that show the user sites that confirm their biases. Barmar (talk) 12:59, 17 April 2021 (UTC)

i think the methodology ai is dodgy and has inbuilt preferences to pick other ai options over others, regardless of their validity. kinda like ai nepotism (~~ unsigned by 141.101.98.174 ~~)

I think it’s interesting that no one has thought to define AI, as if everybody should know what this means! (~~ unsigned by 172.69.35.175 ~~)

- Artificial Intelligence. And yes, everyone DOES kind of know what it is Hiihaveanaccount (talk) 14:02, 19 April 2021 (UTC)

I think the first paragraph (“The joke is...”) is not justified. Too many details that cannot be inferred from the comic, even using AI. (~~ unsigned by 141.101.69.109 ~~)

- My AI infers that the joke is a toaster... 141.101.98.16 21:22, 17 April 2021 (UTC) (PS, what is it with everyone not bothering to sign things?)

Methodology and methodology section likely refer to distinct things. Methodology section is part of the research paper, while methodology refers to how the research was actually performed. 108.162.241.160 09:02, 19 April 2021 (UTC)

I have rewritten most of the explanation to better describe AI-related concepts. I would appreciate edits or comments if anything is unclear. I have tried to define all terms, but as I am familiar with them, I may be using jargon too much. 172.69.170.50 04:36, 21 April 2021 (UTC)

Am I high or is the graph actually a map of the click and drag comic?